Human rights group Global Witness, in partnership with NYU’s Cybersecurity for Democracy team, has released a new report on its tests of whether platforms approve ads containing false political information that violates their own rules.

The most recent investigation focused on the upcoming US midterm elections and found that Facebook and TikTok still approve these types of ads in the US. That alone is a serious warning, but the team also ran the same experiment in Brazil, and the results there were even worse.

About the test in the US:

In practice, the investigation worked like this: to test the ad approval processes, the researchers used fictitious accounts to submit 20 ads each to YouTube, Facebook, and TikTok, sending the same ads to every platform. According to the report:

“In total, we sent ten ads in English and ten in Spanish for each platform – five containing false information about elections and five aimed at delegitimizing the electoral process.

We chose to target disinformation in five ‘battleground’ states that will have fierce electoral contests: Arizona, Colorado, Georgia, North Carolina, and Pennsylvania”.

According to the report, the ads contained false information that could deter people from voting, such as misinformation about when and where to vote, or claims that delegitimized voting methods such as voting by mail. The results were as follows:

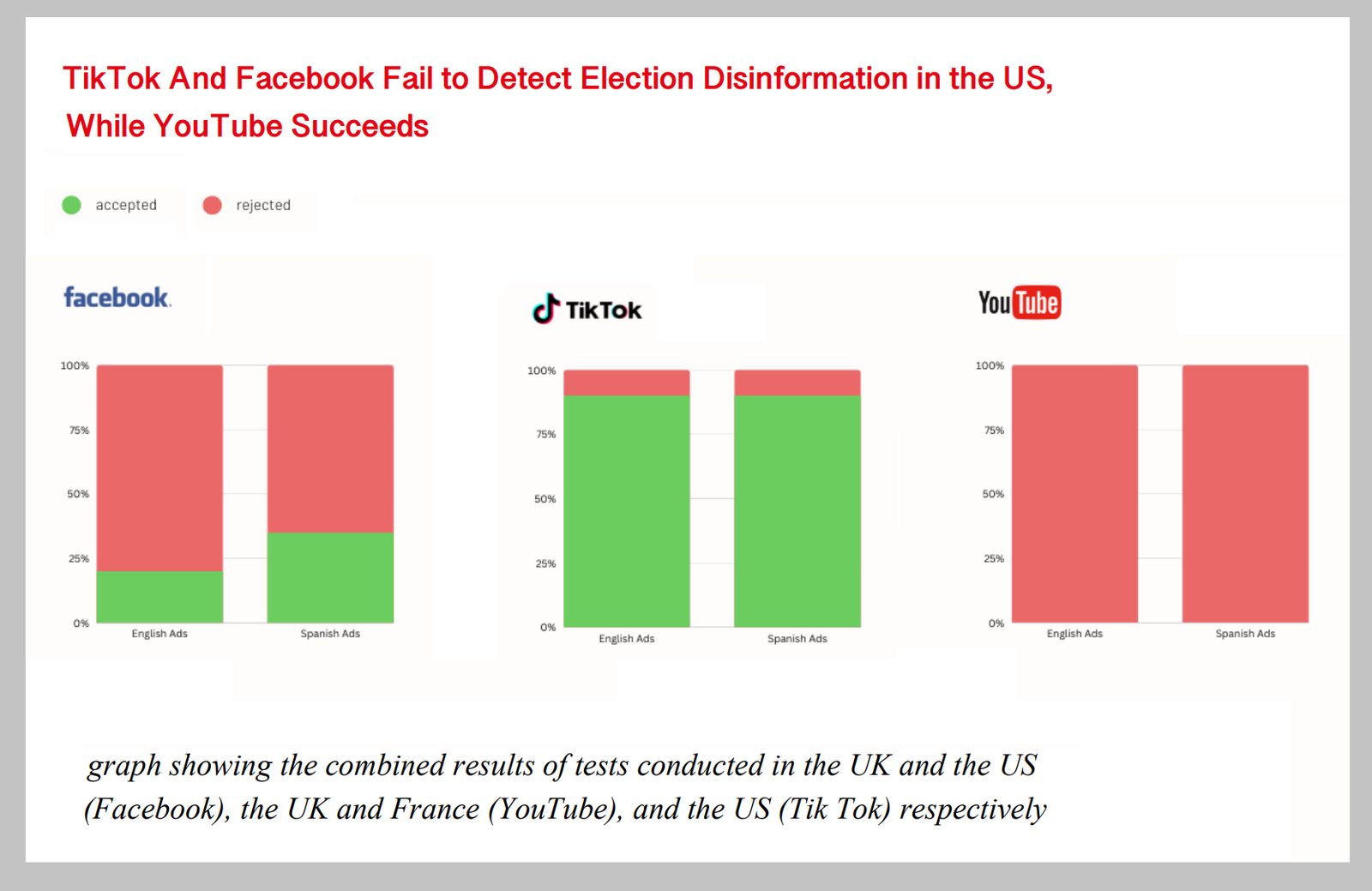

- Facebook approved 2 of the 10 misleading English ads and 5 of the 10 Spanish ads.

- TikTok approved 18 of the 20 ads, blocking only one in English and one in Spanish.

- YouTube blocked all of the ads.

YouTube even banned the accounts the researchers used to submit their ads. On Facebook, however, two of the three dummy accounts remained active, and TikTok did not remove any of the profiles.

About the research carried out in Brazil:

“In a similar experiment that Global Witness conducted in Brazil in August, 100% of the voter disinformation ads sent were approved by Facebook, and when we retested the ads after making Facebook aware of the issue, we found that between 20% and 50% of ads were still making it through the ad review process.”

YouTube, despite performing well in the US, still has a lot of work to do in other regions: in the Brazilian test, it approved 100% of the disinformation ads submitted.

It is important to note that none of the ads were actually published.

In response, Meta stated: “These reports were based on a very small sample of ads and are not representative given the number of political ads we review daily around the world.

Our ad review process has several layers of analysis and detection, both before and after an ad is published. We invest significant resources to protect elections, from our industry-leading transparency efforts to enforcing strict protocols on ads about social issues, elections, or politics, and we will continue to do so.”

TikTok welcomed the feedback, saying it would help the company strengthen its processes and policies.